- Full Conference
- Full Conference 1-Day
- Basic Conference
- Exhibits Plus
- Exhibitor

Date/Time: 6 December 2016, 10:00am - 06:00pm

Venue: Ballroom G+J (Experience Hall), Level 3

Location: The Venetian Macao

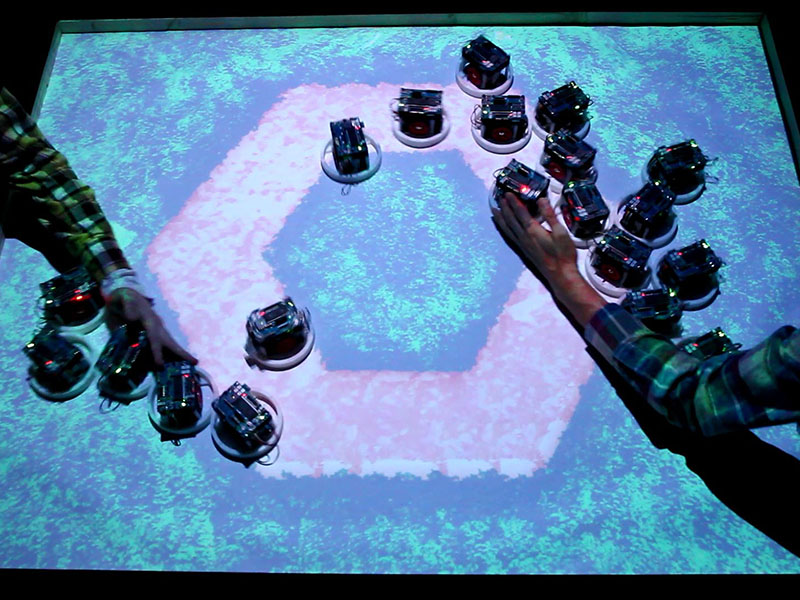

Title: Phygital Field: an Integrated Field with a Swarm of Physical Robots and Digital Images

Booth Number: et_0004

Summary: Collaboration between computer graphics and multiple robots has attracted increasing attention in human-computer interaction and computer entertainment. To keep the connection between the two seamless, we need a system that can accurately determine the position and state of the robots and can control them easily and instantly. However, building a responsive control system for a large number of mobile robots without complex setup, while also avoiding excessive system load, is not trivial. We solved these problems with pixel-level visible light communication (PVLC), a data communication method based on high-speed flicker that is imperceptible to humans.

With PVLC, we can project two types of information onto the same location: visible images for humans and data patterns for mobile robots.
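
As a rough illustration of how one pixel could carry both a visible intensity and a data bit, the sketch below shows each bit as a pair of sub-frames whose average equals the visible intensity. The complementary-pair scheme, the function names `embed_bit` and `read_bit`, and the `amplitude` parameter are all assumptions made for illustration; the summary does not describe the actual PVLC modulation.

```python
def embed_bit(visible, bit, amplitude=0.1):
    """Return two sub-frame intensities for one pixel (hypothetical scheme).

    The pair averages to the visible intensity, so a viewer who integrates
    over both sub-frames sees the image, while the order of the brighter
    and darker sub-frame carries the bit.
    """
    # Clamp so that adding or subtracting the amplitude stays within [0, 1].
    v = min(max(visible, amplitude), 1.0 - amplitude)
    delta = amplitude if bit else -amplitude
    return (v + delta, v - delta)


def read_bit(first, second):
    """Recover the bit from two successive photosensor readings."""
    return 1 if first > second else 0


if __name__ == "__main__":
    for bit in (0, 1):
        first, second = embed_bit(0.5, bit)
        print(bit, read_bit(first, second), (first + second) / 2)
```

Under this assumed scheme, very dark or very bright pixels are clamped slightly toward mid-gray so that the flicker has room to carry data.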

Our system offers two technical innovations: a localization and control method that requires neither initialization nor markers, and a system design that is simple and scalable. The former enables the robots to recognize their positions and states immediately by interpreting the data patterns embedded in pixels. The latter follows from the fact that no measurement devices are required, because both localization and control of the robots are realized through projection. These features are critical to improving the reconfigurability of the system. In addition, the load on the central system does not grow as the number of robots increases, because PVLC can be regarded as a set of parallel communication channels.
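
The localization idea can be sketched as follows: if every projected pixel repeatedly broadcasts its own coordinates, a robot can find its position just by decoding the bits its photosensor sees, with no camera or central tracker involved. This is a minimal sketch under stated assumptions; the Gray-code encoding, the 10-bit field widths, and the names `pixel_bits` and `decode_position` are hypothetical and not taken from the summary.

```python
def to_gray(n):
    """Convert a non-negative integer to its reflected Gray code."""
    return n ^ (n >> 1)


def from_gray(g):
    """Invert to_gray by XOR-folding all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def pixel_bits(x, y, x_bits=10, y_bits=10):
    """Bit sequence (MSB first) that the pixel at (x, y) would broadcast."""
    gx, gy = to_gray(x), to_gray(y)
    bits = [(gx >> i) & 1 for i in reversed(range(x_bits))]
    bits += [(gy >> i) & 1 for i in reversed(range(y_bits))]
    return bits


def decode_position(bits, x_bits=10, y_bits=10):
    """Recover (x, y) on the robot side from the received bit sequence."""
    gx = 0
    for b in bits[:x_bits]:
        gx = (gx << 1) | b
    gy = 0
    for b in bits[x_bits:x_bits + y_bits]:
        gy = (gy << 1) | b
    return from_gray(gx), from_gray(gy)


if __name__ == "__main__":
    # Each robot decodes independently, so adding robots adds no central work.
    for robot_pixel in [(300, 200), (0, 0), (1023, 767)]:
        print(robot_pixel, decode_position(pixel_bits(*robot_pixel)))
```

Gray code is chosen here only because adjacent coordinates differ in a single bit, which limits the error when a sensor straddles two pixels; a plain binary encoding would work in the same way.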

The idea of controlling robots by using information embedded in projected images allows users to easily design an integrated environment for the physical robots and digital images. Our findings have implications in various fields such as game environments and tangible interfaces, and we expect our proposed method to contribute to computer entertainment and user interface applications.

Presenter(s):

Takefumi Hiraki, The University of Tokyo

Shogo Fukushima, The University of Tokyo

Takeshi Naemura, The University of Tokyo